A Beginner’s Guide to Variational Autoencoders (VAE) for Students

July 25, 2025

What is a Neural Network?

Before we get into Variational Autoencoders (VAE), it’s essential to understand what a neural network is. Think of it as a computer brain that helps machines learn from data. Just like our brain has neurons to process information, a neural network has artificial neurons that help in tasks like pattern recognition, decision-making, and learning from experience.

Neural networks are widely used in machine learning, and they power applications such as:

- Image recognition

- Natural language processing

- Speech recognition

- Recommendation systems

What is an Autoencoder?

To understand VAE, we first need to know about autoencoders. An autoencoder is a type of neural network that learns to compress and reconstruct data. In simple terms, an autoencoder takes complex data (like an image or sound), compresses it into a smaller, more manageable form, and then reconstructs the original data from that compressed version.

The process works in two main stages:

- Encoder: Compresses the input data into a smaller format (latent space).

- Decoder: Reconstructs the original data from the compressed version.
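To make the two stages concrete, here is a minimal sketch in plain Python. It assumes toy data where every point lies on the line y = 2x, so two numbers can be squeezed into one. The names `DIRECTION`, `encode`, and `decode` are made up for this illustration, and the weights are hand-picked rather than learned as they would be in a real autoencoder.

```python
import math

# Hypothetical fixed weights: a unit-length direction along the line y = 2x.
# A real autoencoder would learn its weights from data.
DIRECTION = (1 / math.sqrt(5), 2 / math.sqrt(5))

def encode(point):
    """Encoder: compress a 2-D point into one number (its position along DIRECTION)."""
    return point[0] * DIRECTION[0] + point[1] * DIRECTION[1]

def decode(code):
    """Decoder: rebuild a 2-D point from the single latent number."""
    return (code * DIRECTION[0], code * DIRECTION[1])

original = (1.0, 2.0)
code = encode(original)        # the compressed, one-number version
reconstructed = decode(code)   # approximately (1.0, 2.0) again

print("latent code:", code)
print("reconstruction:", reconstructed)
```

The point (1.0, 2.0) survives the round trip because it follows the pattern the weights capture. A point far from the line would come back noticeably changed.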

Autoencoders are useful for:

a. Dimensionality reduction for data analysis

b. Data compression

c. Image noise reduction

What Makes Variational Autoencoders (VAE) Special?

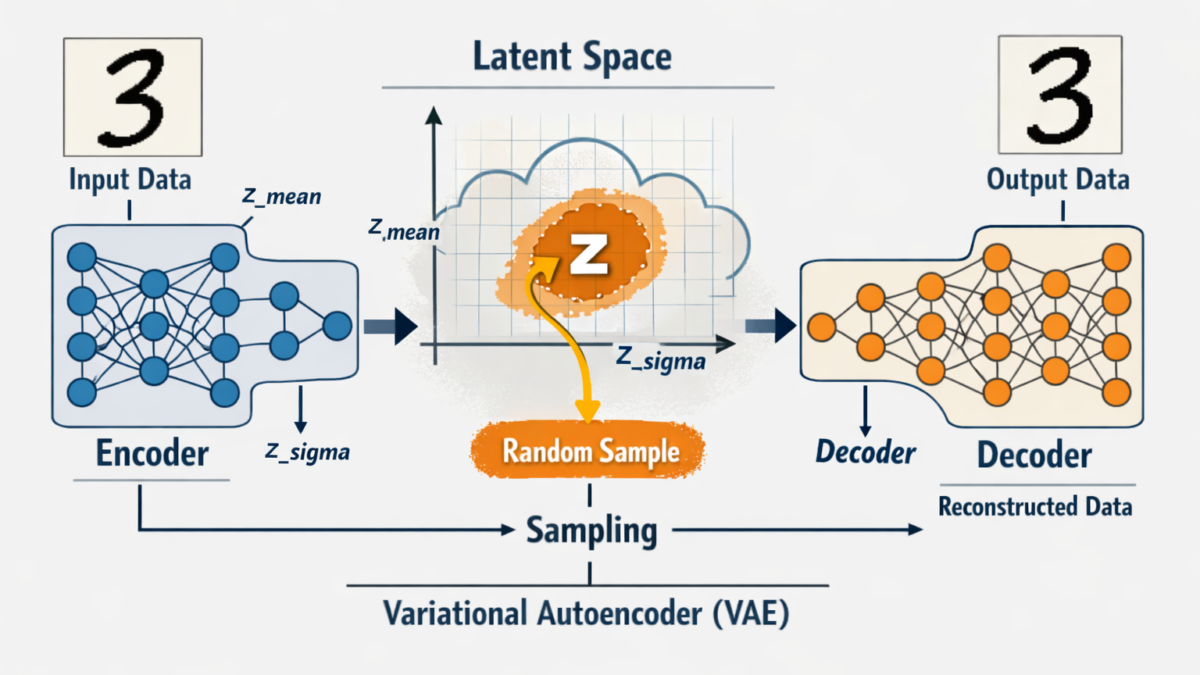

A Variational Autoencoder (VAE) extends the regular autoencoder so that it can generate data as well as compress it. While a traditional autoencoder just compresses data into a smaller representation, a VAE learns the patterns in the data well enough to create new data from them. It doesn’t just memorize the data; it generalizes the features and can generate new, realistic data.

Here’s how VAE works and what makes it stand out:

1. Latent Space as a Probability Distribution

In a regular autoencoder, the encoder compresses data into a single point in the latent space (the compressed version). But in Variational Autoencoders, the encoder doesn’t just output a point. Instead, it outputs the parameters of a probability distribution over the latent space, typically a mean and a variance for each latent dimension.

This means that instead of encoding data into one fixed point, VAEs learn a range of possible values. This added flexibility allows for better data generation and exploration of different possibilities.
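The difference can be sketched in a few lines of Python. Both encoders below are toy stand-ins with made-up formulas (`plain_encoder` and `vae_encoder` are hypothetical names). The sketch also assumes the common convention of describing the distribution as a Gaussian through its mean and log-variance.

```python
import math

def plain_encoder(x):
    """Regular autoencoder: one fixed latent value per input."""
    return 0.5 * x

def vae_encoder(x):
    """VAE: the mean and log-variance of a Gaussian over latent values."""
    mean = 0.5 * x
    log_var = -1.0  # fixed for simplicity; a real encoder predicts this from x as well
    return mean, log_var

point = plain_encoder(4.0)
mean, log_var = vae_encoder(4.0)
std = math.exp(0.5 * log_var)  # convert log-variance to a standard deviation

print(f"plain autoencoder: z = {point}")
print(f"VAE: z is centered on {mean} with spread {std:.3f}")
```

Working with the log-variance instead of the variance is a frequent design choice because it can take any real value while the variance it implies is always positive.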

2. Sampling to Create New Data

Once the VAE has learned a probability distribution in the latent space, it can sample from this distribution to generate new data. For example, if the VAE is trained on images of faces, it can generate new, never-seen-before faces by sampling from the latent space.

In other words, VAEs not only learn to compress data but also generate new examples that look similar to the data they were trained on. This ability to create new, realistic data makes VAEs powerful for applications like image generation and data augmentation.
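Generation can be sketched as "draw a random latent point, then decode it". The example below assumes a two-number latent space and a standard normal distribution to sample from. The `decode` function and its weights are arbitrary placeholders for a trained decoder, so the outputs are only meant to show the mechanics.

```python
import random

def decode(z):
    """Hypothetical decoder: turns a 2-number latent code into a 4-value output.
    The weights are arbitrary stand-ins for a trained network."""
    weights = [(0.5, 0.1), (-0.3, 0.8), (0.2, -0.6), (0.9, 0.4)]
    return [w0 * z[0] + w1 * z[1] for w0, w1 in weights]

rng = random.Random(0)  # seeded so the run is repeatable

new_samples = []
for _ in range(3):
    # Sample a latent point from a standard normal distribution.
    z = [rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)]
    new_samples.append(decode(z))

for sample in new_samples:
    print([round(value, 3) for value in sample])
```

Each pass through the loop produces a different output because a different latent point is drawn, with no input image needed.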

3. KL Divergence for Smooth Latent Space

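One challenge of using a probability distribution in the latent space is that it could become messy, with different inputs encoded into regions that are far apart and have gaps between them. VAEs address this problem with a concept called KL Divergence, which measures how far one probability distribution is from another. Here it compares each encoded distribution with a simple reference distribution (usually a standard normal), and penalizing that distance regularizes the latent space so the encoded regions stay close together and are easy to sample from.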

In simple terms, KL Divergence helps the model keep the latent space organized and smooth, so that it can generate high-quality data that makes sense.
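For the common case where the encoder outputs a Gaussian with a mean and a log-variance per latent dimension, and the reference distribution is a standard normal, the KL Divergence has a simple closed form. The sketch below assumes exactly that setup; the function name is chosen for this illustration.

```python
import math

def kl_to_standard_normal(mean, log_var):
    """KL( N(mean, exp(log_var)) || N(0, 1) ), summed over latent dimensions."""
    return sum(
        0.5 * (math.exp(lv) + m * m - 1.0 - lv)
        for m, lv in zip(mean, log_var)
    )

print(kl_to_standard_normal([0.0, 0.0], [0.0, 0.0]))  # 0.0: identical to the reference
print(kl_to_standard_normal([1.0], [0.0]))            # 0.5: mean shifted by 1
print(kl_to_standard_normal([3.0], [0.0]))            # 4.5: mean shifted by 3
```

The penalty is zero when the encoded distribution matches the reference and grows as the mean drifts away, which is what pulls the encodings toward one shared, well-organized region.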

4. Loss Function in VAEs

A key part of training any neural network is the loss function. The loss function helps the model learn by indicating how far it is from the desired output. For VAEs, the loss function is a combination of:

- Reconstruction Loss: Measures how accurately the decoder can reconstruct the input data from the latent space.

- KL Divergence Loss: Ensures the latent space stays smooth and well-behaved.

By combining these two, the VAE can efficiently compress data and generate realistic new data at the same time.
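As a rough sketch, the combined loss for one example could be computed as below. Two assumptions are baked in: squared error as the reconstruction loss (binary cross-entropy is another common choice) and a Gaussian encoder compared against a standard normal for the KL term. The function `vae_loss` is a name invented for this example.

```python
import math

def vae_loss(x, x_hat, mean, log_var):
    """Return (reconstruction, kl, total) for one example."""
    # Reconstruction loss: squared error between input and output (assumed choice).
    reconstruction = sum((a - b) ** 2 for a, b in zip(x, x_hat))
    # KL term: distance of N(mean, exp(log_var)) from a standard normal.
    kl = sum(0.5 * (math.exp(lv) + m * m - 1.0 - lv) for m, lv in zip(mean, log_var))
    return reconstruction, kl, reconstruction + kl

rec, kl, total = vae_loss(x=[1.0, 0.0], x_hat=[0.5, 0.0], mean=[1.0], log_var=[0.0])
print(rec, kl, total)  # 0.25 0.5 0.75
```

Training pushes both numbers down together: a lower reconstruction term means sharper copies, a lower KL term means a tidier latent space, and the two often pull in opposite directions.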

How Does a VAE Work?

Let’s break it down step by step using a simple example:

- Input Data: Let’s say you have a collection of images of cats.

- Encoder: The encoder compresses each cat image into a smaller, abstract form, but instead of a single point, it creates a probability distribution of possible representations.

- Sampling: From this distribution, the model samples a point (a specific compressed version of the image).

- Decoder: The decoder takes this point and reconstructs an image of a cat.

- Training: The VAE is trained to minimize both the reconstruction loss (how closely the reconstructed cat matches the original) and the KL Divergence loss (keeping the latent space organized).
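The first four steps above can be sketched as a single forward pass. Everything here is a toy stand-in: the three-number list plays the role of a cat image, and `encoder`, `sample`, and `decoder` are hypothetical functions with made-up formulas instead of trained networks. The training step is left out because it needs an optimizer and gradients.

```python
import math
import random

rng = random.Random(42)  # seeded so the run is repeatable

def encoder(x):
    """Toy encoder: returns (mean, log_var) of a 1-D latent Gaussian."""
    mean = sum(x) / len(x)
    log_var = -2.0  # fixed here; a real encoder would predict it from x
    return mean, log_var

def sample(mean, log_var):
    """Draw z = mean + std * noise, with noise from a standard normal."""
    std = math.exp(0.5 * log_var)
    return mean + std * rng.gauss(0.0, 1.0)

def decoder(z, size):
    """Toy decoder: repeats the latent value for every output position."""
    return [z] * size

x = [0.2, 0.4, 0.6]              # step 1: input (stands in for a tiny image)
mean, log_var = encoder(x)       # step 2: a distribution, not a single point
z = sample(mean, log_var)        # step 3: pick one point from it
x_hat = decoder(z, len(x))       # step 4: reconstruct

print("latent sample:", z)
print("reconstruction:", x_hat)
```

Writing the sample as the mean plus scaled noise is a standard arrangement in VAEs because it keeps the randomness separate from the values the network has to learn.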

Applications of Variational Autoencoders (VAE)

Variational Autoencoders (VAE) have numerous applications in the field of machine learning and artificial intelligence. Some of the most common uses include:

Data Augmentation: In applications like computer vision or natural language processing, VAEs can create synthetic data that can help improve the performance of machine learning models by providing more diverse examples.

Generating Realistic Images: VAEs can generate entirely new images that look like they belong to the same dataset. For example, generating new faces or landscapes.

Creating New Music or Sounds: VAEs can learn patterns from audio data and generate new pieces of music or sound effects.

Anomaly Detection: Since VAEs learn the normal patterns in data, inputs that they reconstruct poorly stand out as outliers or anomalies. This can be used, for example, to flag fraudulent transactions in banking or to spot abnormalities in medical scans.
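One simple way to picture anomaly detection is to flag any input whose reconstruction error exceeds a cutoff. The sketch below is a simplification: it uses a plain encode-then-decode stand-in (a projection onto the line y = 2x) instead of a trained VAE, and both the data points and the `THRESHOLD` value are invented for illustration.

```python
import math

# Hypothetical "learned" pattern: normal points lie near the line y = 2x.
DIRECTION = (1 / math.sqrt(5), 2 / math.sqrt(5))

def reconstruct(point):
    """Stand-in for encode-then-decode: project the point onto DIRECTION."""
    code = point[0] * DIRECTION[0] + point[1] * DIRECTION[1]
    return (code * DIRECTION[0], code * DIRECTION[1])

def reconstruction_error(point):
    """Distance between a point and its reconstruction."""
    return math.dist(point, reconstruct(point))

THRESHOLD = 0.5  # assumed cutoff; in practice chosen from errors on known-normal data

def is_anomaly(point):
    return reconstruction_error(point) > THRESHOLD

points = [(1.0, 2.1), (2.0, 3.9), (3.0, 0.0)]
flags = [is_anomaly(p) for p in points]
print(flags)  # [False, False, True]
```

The first two points follow the familiar pattern and are rebuilt almost perfectly, while the third does not fit and is flagged.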

Why are VAEs Important?

The ability of VAEs to generate new data and learn a probabilistic representation of the data makes them incredibly useful. They combine the best of both worlds: compression and generation. With VAEs, you’re not just able to compress and reconstruct data—you’re also able to create entirely new instances of that data.

Conclusion

Variational Autoencoders (VAE) are a powerful and flexible tool in the world of machine learning. They go beyond simple data compression by learning probabilistic representations of data, allowing them to generate new, realistic data. Whether you’re working with images, audio, or even text, VAEs are a key technology in fields like artificial intelligence, data generation, and anomaly detection.